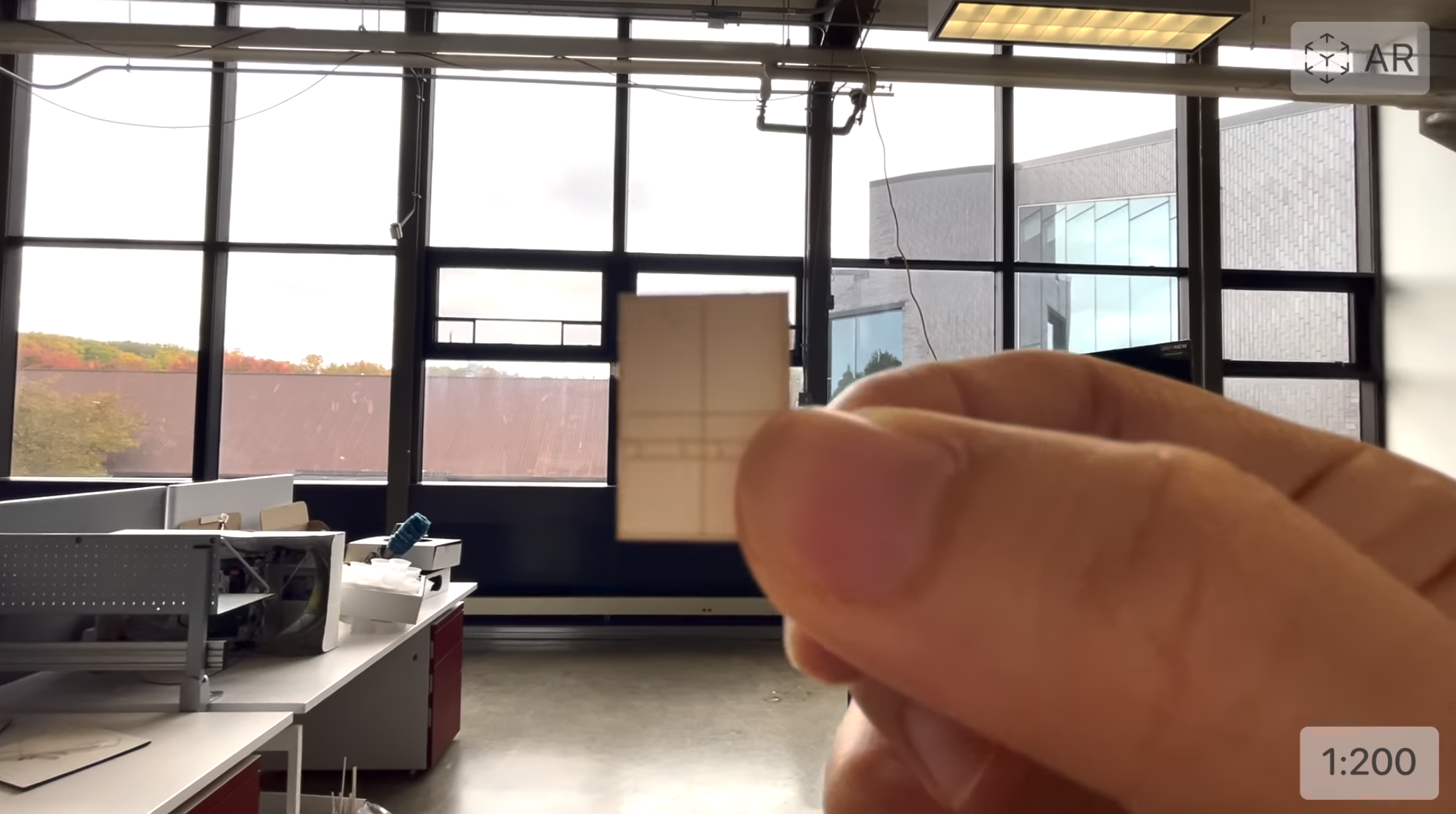

Building models in AR

How can AR interaction be a new design tool for architectural designers?

My role

AR design prototyper

I started by identifying the problem and goal, then conducted a contextual inquiry. Next, I sketched out ideas and framed the scope by considering the potential constraints and essential functions. I then conducted a competitive analysis and built a paper prototype and a lo-fi prototype to test the ideas.

Duration

Oct 2021 - Nov 2021 (1 mo)

Tools

Lens Studio, Museum cardboard, Laser cutter, Adobe Suite (Premiere, After Effects, Photoshop, Illustrator)

Key points

Define the problem statement, conduct the competitive analysis, and scope the project.

Take the design from sketches through a lo-fi prototype to a hi-fi prototype, applying the takeaways that came up along the way.

Create a demo video using Lens Studio’s AR filter to deliver the design.

Demo

Problem statement and Goal

How can AR interaction be a new design tool for architectural designers?

Tools help design. We sketch with pencils and color with crayons. We create gradients with watercolor and texture with oil paint. Now it is time to embrace the virtual world, and I believe a new tool will once again help create the next fascinating design.

The new tool I am thinking of is augmented reality. While architecture is spatial design in the physical world, virtual reality is spatial design in the digital world. Augmented reality can be a bridge between the physical and digital worlds, and the thinking about space that both fields share can be built into the new tool to help both.

-> Use AR to advance the architectural design process

Ideation

Users can import new objects into the projected virtual model by grabbing physical content from the world space.

When users extrude a physical wall with hand gestures, AR can render the result so they can experience it.

Scope framing

Possible Constraints

Hard to distinguish between physical and virtual contents

Hard to create an intuitive hand gesture for both physical and virtual contents

Hard to track and work on a changing scene

Essential function

Import physical content into the projected 3D models as virtual content

Modify the physical space by hand gesture and view the render in AR

Annotate the world space in AR

Competitive analysis

| Tool | View in the world space | Tools in AR | Tools in VR | Recognize physical contents | Interact with physical contents |
|---|---|---|---|---|---|
| Morpholio Trace | O | △ | △ | X | X |
| Arkio | O | O | O | △ | X |
| magicplan | X | O | △ | O | △ |

Design process + Takeaways

Lo-fi paper prototype phase 1

Takeaways

Too many buttons on the hand-tracking menu.

-> Simplify the menu and enlarge the buttons

Technically hard to identify physical content by framing it with clicks.

-> Select the physical item by drawing with brushes

Filming with different applications and devices caused color inconsistencies.

-> Use the same application while prototyping

Lo-fi prototype phase 2

Takeaways

Hard to perform the gesture precisely enough for it to be detected.

-> Simplify the gesture with the user’s body in mind

Hard to manipulate and add content accurately

-> Add more supporting tools instead of relying only on hands

Users are not sure what to do next.

-> Add more hints and tooltips.

Core Interactions

Implementation

Physical model: Museum cardboard and Laser cutting

Marker: Adobe Illustrator

Icons: Adobe Illustrator

Video editing: Adobe Premiere and After Effects

User interface: Adobe XD

AR filter: Lens Studio

Image tracking - project marker-based 3D models

Hand tracking - hand menu and cursor

Object controller script - manipulate virtual contents

Clone - turn the physical content virtual

Speech recognition - voice typing

Behavior script - interaction

Next steps

Let multiple users collaborate synchronously.

Let users have more control over the virtual content.

Optimize the interaction to correctly select the object in the physical world.

Challenges and learning

At first, I thought my features might be unrealistic given the limits of current technology. However, after getting advice during office hours and browsing online, the existing resources blew my mind. Among them, I found three topics especially interesting. While trying them out, I also realized that lighting and marker detectability are critical.

The first topic is persistent AR, which places a digital layer on the world space and allows developers to anchor virtual content or data in the real world. The second is machine learning and physical object detection. Amazingly, Google has provided a machine-learning template for users to train a model and use it in Lens Studio. The third is cloning. Fritz AI is a talented lens creator, and I found that they use AI to copy and paste 2D and 3D objects from the world space into virtual space.

I found that color is hard to detect and that symmetric markers should be avoided. I tried a gradient-colored marker and a big, bold M with a thick frame, but neither worked well. I also realized while testing my prototype that my hand’s shadow seriously affected the detection.

All in all, I learned a lot in the prototyping process. I am glad to have honed my prototyping skills and to have found so many resources to learn from.